Circuit breakers

Circuit breaking is a resiliency mechanism that temporarily stops requests to a downstream service when it is detected to be unhealthy. It helps prevent cascading failures by blocking traffic to services that are overloaded, unresponsive, or returning too many errors. Once the service recovers, the circuit breaker automatically resumes traffic.

Because circuit breaking is implemented between services in the ambient mesh, and the configuration is generally specified by the downstream service owner, it can also be thought of as an implementation of the load shedding pattern.

In this guide you will learn how to set up and monitor circuit breakers in your ambient mesh.

Prerequisites

You should have a running Kubernetes cluster with Istio installed in ambient mode. Ensure your default namespace is added to the ambient mesh:

$ kubectl label ns default istio.io/dataplane-mode=ambient

Deploy sample services

To test circuit breaking, you will deploy a service, httpbin, and two clients: curl, which sends a single request, and fortio, a load-testing tool that lets you specify how many requests to send and at what concurrency.

$ kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.26/samples/httpbin/httpbin.yaml

$ kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.26/samples/curl/curl.yaml

$ kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.26/samples/httpbin/sample-client/fortio-deploy.yaml

Deploy a waypoint

When you configure circuit breaking, you are configuring how a proxy behaves as it makes calls to a target service. For example, you will configure the maximum number of HTTP requests per connection. Because this is a Layer 7 setting, it requires a waypoint proxy.

If you don’t already have a waypoint installed for the default namespace, install one:

$ istioctl waypoint apply -n default --enroll-namespace --wait

For more information on using waypoints, see Configuring waypoint proxies.

Turn on waypoint logging

Waypoint access logging is off by default, and can be turned on using Istio’s Telemetry API.

So that you can inspect the logs and see requests returning a 503 response code, turn on logging for the waypoint:

$ kubectl apply -f - <<EOF
---
apiVersion: telemetry.istio.io/v1
kind: Telemetry
metadata:
  name: enable-access-logging
  namespace: default
spec:
  accessLogging:
  - providers:
    - name: envoy
EOF

Verify that requests traverse the waypoint

In one terminal, display the waypoint’s logs:

$ kubectl logs --follow deploy/waypoint

In a second terminal, run the following command:

$ kubectl exec deploy/curl -- curl -s httpbin:8000/get

This should produce a log entry similar to this:

[2024-11-27T09:11:46.542Z] "GET /get HTTP/1.1" 200 - via_upstream - "-" 0 369 9 8 "-" "curl/8.11.0" "a66c07a3-ba39-448f-a7e6-a83d1e1bc9ca" "httpbin:8000" "envoy://connect_originate/10.244.2.24:8080" inbound-vip|8000|http|httpbin.default.svc.cluster.local envoy://internal_client_address/ 10.96.127.222:8000 10.244.1.4:46546 - default

Everything is now in place to test circuit breaking. Keep the logs from the waypoint open; you will refer to them again.

Generate an initial load

Before configuring circuit breaking, use Fortio to send 100 requests to httpbin and observe the results:

$ kubectl exec deploy/fortio-deploy -- fortio load -c 3 -qps 0 -n 100 \
    -quiet http://httpbin:8000/get

Here is a quick summary of the flags used in the above command:

-c 3: use three concurrent threads, so that up to three requests are in flight at a time

-qps 0: queries per second; a value of zero means no delay between calls, so requests are sent as fast as possible

-n 100: the number of requests to send to the target endpoint

-quiet: make the output of the command less verbose

Confirm that the output shows all 100 requests returned code 200 (success):

Code 200 : 100 (100.0 %)

You will see the log entries in your waypoint access log:

[2024-11-27T09:12:06.691Z] "GET /get HTTP/1.1" 200 - via_upstream - "-" 0 347 0 0 "-" "fortio.org/fortio-1.66.5" "9ebcf627-0616-49ae-a7c0-49ded0720075" "httpbin:8000" "envoy://connect_originate/10.244.2.24:8080" inbound-vip|8000|http|httpbin.default.svc.cluster.local envoy://internal_client_address/ 10.96.127.222:8000 10.244.2.25:57528 - default

Configure circuit breaking

A DestinationRule configures post-routing behavior of traffic to a service. This is where a service owner will configure the circuit breaking settings for that service.

Review and apply the following rule, which configures calls to httpbin with artificially low thresholds:

$ kubectl apply -f - <<EOF
---
apiVersion: networking.istio.io/v1
kind: DestinationRule
metadata:
  name: httpbin
spec:
  host: httpbin.default.svc.cluster.local
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 1
      http:
        http1MaxPendingRequests: 1
        maxRequestsPerConnection: 1
EOF

The waypoint will be configured to use a connection pool with a single connection, a maximum of one HTTP request per connection, and a pending requests queue with a maximum size of one. Refer to the Istio reference documentation for more information.

These thresholds are set artificially low for this example. In practice, as Michael Nygard writes in his book Release It!:

The ideal way to define “load is too high” is for a service to monitor its own performance relative to its SLA. When requests take longer than the SLA, it’s time to shed some load.

Observe the responses

You can “trip” the circuit breaker by sending more than a couple of concurrent requests.
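Why is a concurrency of three enough to trip these thresholds? The sketch below is a deliberately simplified model, written as a shell function with a hypothetical name (rejected_in_burst is not part of Istio or Fortio). It assumes HTTP/1.1, where each connection carries one request at a time, so a burst of simultaneous requests can fill at most maxConnections active slots plus http1MaxPendingRequests queued slots; anything beyond that overflows. It ignores timing and connection churn (maxRequestsPerConnection: 1 closes each connection after a single request), so it explains the trend rather than predicting the exact 503 percentage.

```shell
# Simplified model (an assumption, not Envoy's actual algorithm): in one
# simultaneous burst over HTTP/1.1, at most MAX_CONNECTIONS requests are
# active and at most MAX_PENDING wait in the queue; the rest overflow (503 UO).
#
# Usage: rejected_in_burst CONCURRENCY MAX_CONNECTIONS MAX_PENDING
rejected_in_burst() {
  admitted=$(( $2 + $3 ))
  excess=$(( $1 - admitted ))
  if [ "$excess" -gt 0 ]; then
    echo "$excess"
  else
    echo 0
  fi
}

# Thresholds from the DestinationRule above, with Fortio's -c 3:
rejected_in_burst 3 1 1   # prints 1
```

Raising either threshold, in the model or in the DestinationRule, raises the number of simultaneous requests that fit before the breaker trips.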

The Envoy proxy defines response flags that indicate specific events that occurred while a request was being handled.

The UO (UpstreamOverflow) response flag is an indication of circuit breaking, and can be observed in the waypoint’s logs.
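A 503 on its own does not prove that a circuit breaker tripped; other upstream problems also produce 503s, with different flags. The hypothetical describe_flag helper below maps a few upstream-related flags to the names Envoy's access log documentation uses for them. The list is intentionally partial, and the sketch handles one flag at a time, although Envoy can report several flags on a single entry, separated by commas.

```shell
# describe_flag FLAG: print the Envoy name for a few upstream-related
# response flags. Partial list; consult Envoy's access log docs for the rest.
describe_flag() {
  case "$1" in
    -)   echo "none" ;;
    UO)  echo "UpstreamOverflow (circuit breaking)" ;;
    UF)  echo "UpstreamConnectionFailure" ;;
    UH)  echo "NoHealthyUpstream" ;;
    UT)  echo "UpstreamRequestTimeout" ;;
    URX) echo "UpstreamRetryLimitExceeded" ;;
    *)   echo "other: $1" ;;
  esac
}

describe_flag UO   # prints "UpstreamOverflow (circuit breaking)"
```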

With circuit breaking thresholds in place, send another 100 requests:

$ kubectl exec deploy/fortio-deploy -- fortio load -c 3 -qps 0 -n 100 \
    -quiet http://httpbin:8000/get

The output should show a share of the requests returning 503 (Service Unavailable). The exact split varies from run to run; for example:

Code 200 : 65 (65.0 %)

Code 503 : 35 (35.0 %)
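To compare runs, or later to compare against a metric counter, it helps to pull a single status-code count out of Fortio's summary. The function below is a hypothetical helper that assumes the summary lines look like the ones shown above (Code, status, colon, count, percentage).

```shell
# fortio_code_count STATUS: read Fortio output on stdin and print how many
# requests returned STATUS, or 0 if that status never appeared.
# Assumes summary lines of the form "Code 503 : 35 (35.0 %)".
fortio_code_count() {
  awk -v code="$1" '$1 == "Code" && $2 == code { n = $4 } END { print n + 0 }'
}

printf '%s\n' 'Code 200 : 65 (65.0 %)' 'Code 503 : 35 (35.0 %)' | fortio_code_count 503   # prints 35
```

Against the live run, merging stderr into the pipe in case Fortio writes its summary there:

$ kubectl exec deploy/fortio-deploy -- fortio load -c 3 -qps 0 -n 100 -quiet http://httpbin:8000/get 2>&1 | fortio_code_count 503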

Look at the waypoint logs again. Not every request returned a 200 response.

Here is one of the 503 responses:

[2024-11-27T09:13:39.078Z] "GET /get HTTP/1.1" 503 UO upstream_reset_before_response_started{overflow} - "-" 0 81 0 - "-" "fortio.org/fortio-1.66.5" "6df1d0fb-30cc-4908-b3c4-c9c47ac1bf82" "httpbin:8000" "-" inbound-vip|8000|http|httpbin.default.svc.cluster.local - 10.96.127.222:8000 10.244.2.25:57528 - default

The UO response flag indicates that the circuit breaker tripped and prevented the request from reaching httpbin.
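Scrolling through the log works for a handful of requests; for a hundred it is easier to tally them. The hypothetical summarize_access_log function below counts log entries by status code and response flag. It assumes Istio's default access log format, as in the entries shown above, where splitting on whitespace puts the status code in the fifth field and the response flags in the sixth.

```shell
# summarize_access_log: read waypoint access log lines on stdin and print
# "<status> <flags> <count>" for each combination, sorted.
# Assumes the default format shown above: field 5 = status, field 6 = flags.
# Lines that do not start with "[timestamp] \"METHOD" are ignored.
summarize_access_log() {
  awk '$1 ~ /^\[/ && $2 ~ /^"/ { count[$5 " " $6]++ }
       END { for (key in count) print key, count[key] }' | sort
}
```

For example, limiting the window to recent entries so that earlier runs are not counted:

$ kubectl logs deploy/waypoint --since=5m | summarize_access_log

If the window covers only the last Fortio run, the 503 UO row should line up with the 503 count that Fortio reported.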

Configure metrics collection

It is important to be able to monitor circuit breaking events. The Envoy proxy can produce many metrics that help monitor its behavior. Overflows are reflected in specific metrics, including upstream_cx_overflow (connection overflow) and upstream_rq_pending_overflow (pending request overflow).

By default, Istio configures Envoy to record a minimal set of statistics to reduce the overall CPU and memory footprint. Circuit breaker metrics are not included by default, and you must configure Istio to include them.

Using a Helm values file to configure Istio’s global mesh settings, you can include metrics that pertain to circuit breaking:

---
meshConfig:
  defaultConfig:
    # enable stats for circuit breakers, request retries, upstream connections, and request timeouts globally:
    proxyStatsMatcher:
      inclusionRegexps:
        - ".*outlier_detection.*"
        - ".*upstream_rq_retry.*"
        - ".*upstream_rq_pending.*"
        - ".*upstream_cx_.*"
      inclusionSuffixes:
        - "upstream_rq_timeout"

Save the above content to a file named mesh-config.yaml.
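Before upgrading istiod, you can sanity-check that the counters this guide relies on are covered by the matcher. The hypothetical stat_included function below mimics the two lists in mesh-config.yaml, treating each regular expression as a match against the whole stat name (which is why the patterns begin and end with .*) and each suffix as a plain string suffix. It is a local approximation, not Istio's or Envoy's matching code.

```shell
# stat_included NAME: succeed if NAME would be kept by the proxyStatsMatcher
# lists in mesh-config.yaml (approximation of whole-name regex matching).
stat_included() {
  # inclusionRegexps: grep -x requires a pattern to match the entire line.
  if printf '%s\n' "$1" | grep -Eqx \
      -e '.*outlier_detection.*' \
      -e '.*upstream_rq_retry.*' \
      -e '.*upstream_rq_pending.*' \
      -e '.*upstream_cx_.*'; then
    return 0
  fi
  # inclusionSuffixes: plain suffix comparison.
  case "$1" in
    *upstream_rq_timeout) return 0 ;;
    *) return 1 ;;
  esac
}

for stat in upstream_cx_overflow upstream_rq_pending_overflow upstream_rq_total; do
  if stat_included "cluster.httpbin.$stat"; then
    echo "$stat: included"
  else
    echo "$stat: not included"
  fi
done
```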

To configure metrics collection, run the helm upgrade command for the istiod chart, providing the additional configuration file as an argument:

$ helm upgrade istiod istio/istiod --namespace istio-system \
    --set profile=ambient \
    --values mesh-config.yaml \
    --wait

The waypoint must then be restarted to pick up the updated configuration:

$ kubectl rollout restart deploy waypoint

You are ready to observe some metrics.

Deploy Prometheus and display the metrics

Deploy Prometheus to the cluster:

$ kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.26/samples/addons/prometheus.yaml

See Configure and view metrics for more information on configuring and viewing metrics.

Once Prometheus is deployed and ready, connect to its dashboard:

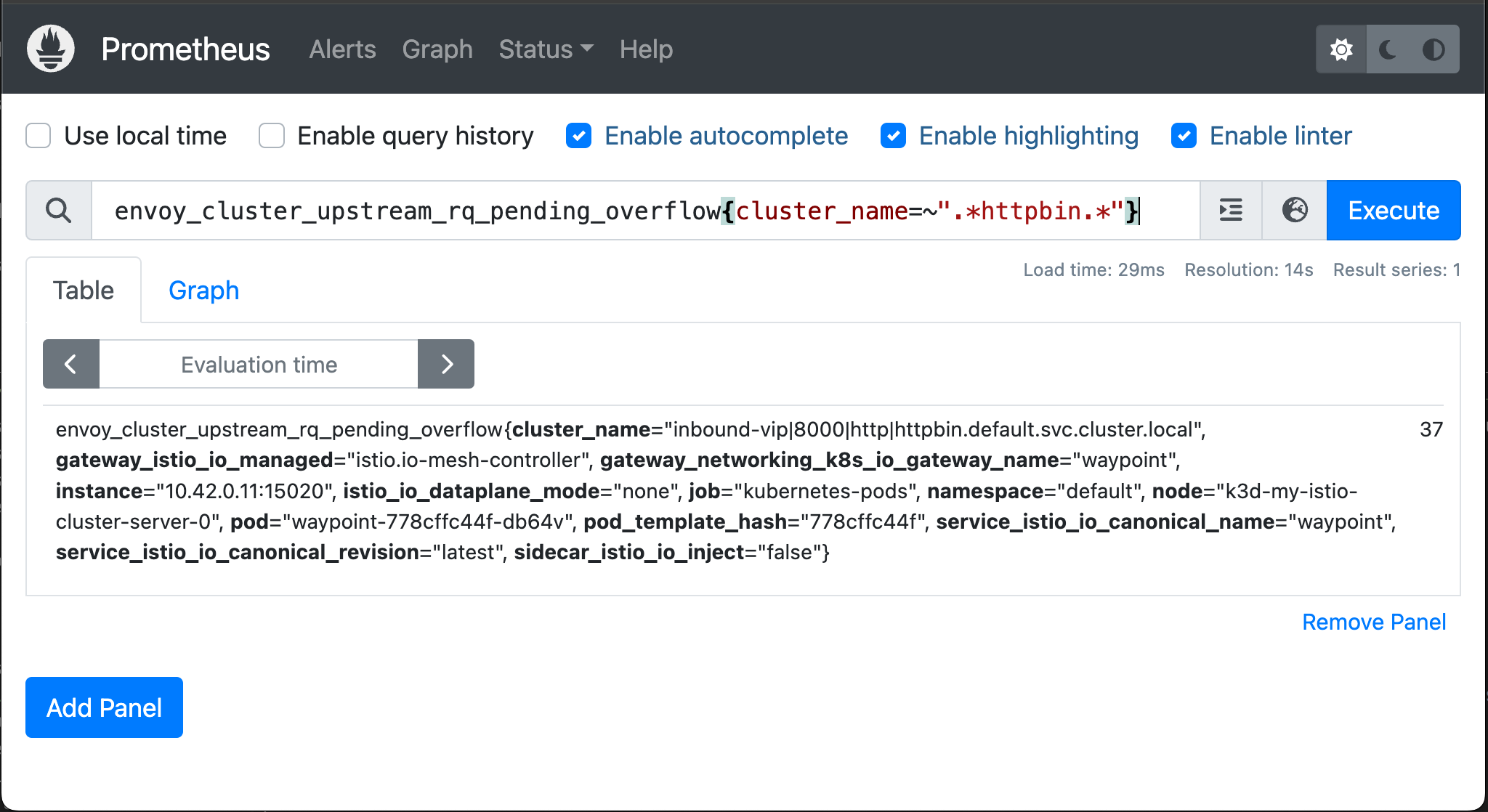

$ istioctl dashboard prometheusThe primary metrics of interest are the pending connection overflow (envoy_cluster_upstream_rq_pending_overflow) and pending request overflow (envoy_cluster_upstream_cx_overflow) counters.

Query the pending connection overflow metrics pertaining to calls destined for httpbin:

envoy_cluster_upstream_rq_pending_overflow{cluster_name=~".*httpbin.*"}

-

Make a note of the current counter value for the metric. (If you have just deployed Prometheus, there will be no query result.)

-

Send in another 100 requests, some of which will fail, and cause the circuit breaker metric counters to increase:

kubectl exec deploy/fortio-deploy -- fortio load -c 3 -qps 0 -n 100 \ -quiet http://httpbin:8000/get -

Wait about 15-20 seconds for Prometheus to make another round of metrics collection from the waypoint, and re-run the Prometheus query. Observe that the metric counts have increased: the number should be the same as the number of

503errors reported by Fortio.

Pending requests overflow counter metric in Prometheus
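The comparison in the last step is simple arithmetic, but writing it down makes the expectation explicit: in this setup, where the 503s come from the full pending queue, each rejected request increments upstream_rq_pending_overflow once, so the counter's increase over the run should equal Fortio's 503 count. The function name and argument order below are hypothetical.

```shell
# overflow_matches_503s BEFORE AFTER FORTIO_503S
# Succeeds when the pending-overflow counter grew by exactly the number of
# 503 responses Fortio reported for the run in between.
overflow_matches_503s() {
  delta=$(( $2 - $1 ))
  echo "counter increased by $delta; Fortio reported $3 responses with code 503"
  [ "$delta" -eq "$3" ]
}

# Made-up readings for illustration: 37 before the run, 72 after, 35 x 503.
overflow_matches_503s 37 72 35 && echo "counter matches Fortio"
```

If the first query returned no result, pass 0 as the BEFORE value.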

Query the Envoy admin interface

You can also inspect the metrics collected by a waypoint through the Envoy proxy’s admin interface. Open it with this command:

$ istioctl dash envoy deployment/waypoint.default

Click the “stats” link near the bottom of the page. In the regular expression field for filtering stats, enter “overflow” and press Enter to see the relevant metrics. For example:

cluster.inbound-vip|8000|http|httpbin.default.svc.cluster.local;.upstream_cx_overflow: 59

cluster.inbound-vip|8000|http|httpbin.default.svc.cluster.local;.upstream_cx_pool_overflow: 0

cluster.inbound-vip|8000|http|httpbin.default.svc.cluster.local;.upstream_rq_pending_overflow: 37

cluster.inbound-vip|8000|http|httpbin.default.svc.cluster.local;.upstream_rq_retry_overflow: 0
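If you prefer the command line to the admin page, the same counters can be read with pilot-agent, assuming the waypoint runs Istio's standard proxy image, which includes it. The hypothetical envoy_stat_sum helper below sums one counter across the "name: value" lines that Envoy prints, matching only names that end in ".<counter>" so that, for example, upstream_cx_pool_overflow is not mistaken for upstream_cx_overflow.

```shell
# envoy_stat_sum COUNTER: read "name: value" lines from Envoy's stats output
# on stdin and print the sum of every stat whose name ends in ".COUNTER".
envoy_stat_sum() {
  awk -F': ' -v suffix=".$1" '
    length($1) >= length(suffix) &&
    substr($1, length($1) - length(suffix) + 1) == suffix { total += $2 }
    END { print total + 0 }'
}
```

For example, to read the pending request overflow counter for httpbin:

$ kubectl exec deploy/waypoint -- pilot-agent request GET stats | grep httpbin | envoy_stat_sum upstream_rq_pending_overflow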

Clean up

Delete the Prometheus deployment:

$ kubectl delete -f https://raw.githubusercontent.com/istio/istio/release-1.26/samples/addons/prometheus.yaml

Delete the DestinationRule:

$ kubectl delete destinationrule httpbin

Delete the Telemetry resource that enabled access logging:

$ kubectl delete telemetry enable-access-logging

Deprovision the waypoint:

$ istioctl waypoint delete -n default waypoint

Deprovision the sample applications:

$ kubectl delete -f https://raw.githubusercontent.com/istio/istio/release-1.26/samples/httpbin/httpbin.yaml

$ kubectl delete -f https://raw.githubusercontent.com/istio/istio/release-1.26/samples/curl/curl.yaml

$ kubectl delete -f https://raw.githubusercontent.com/istio/istio/release-1.26/samples/httpbin/sample-client/fortio-deploy.yaml