Migrating from sidecars to ambient mesh: everything you need to know

Istio’s 2017 announcement invited you to imagine that you could “transparently inject a layer of infrastructure between a service and the network that gives operators the controls they need while freeing developers from having to bake solutions to distributed system problems into their code”. It is worth noting that it does not specifically mention the method by which this vision would be realised. Sidecars were always an implementation detail to us on the Istio team: one that made possible something otherwise so complicated as to be impractical for most users, though not without drawbacks. We experimented with using gRPC as the data plane for users who were willing and able to change their applications, but ultimately found a better expression of our goals in ambient mesh.

With estimates suggesting that 10% of all Kubernetes deployments ran Istio even before ambient mesh launched, there are clearly a lot of people who have found value in sidecars. Now that ambient mode is GA, we want to help those users and teams understand the differences between the two modes, and we hope a substantial portion of them will make the move to ambient mesh.

In this series, we will walk through the architectural differences between sidecar mode and ambient mode that need to be considered in order for that migration to be successful.

Understanding ambient mode

From launch, Istio implemented a set of features largely based on the abilities gained by attaching Envoy sidecars to both client and server. The introduction of “ambient mode” meant that this feature set had to be retroactively named “sidecar mode”.

The goal of ambient mode is not to replicate the feature set of sidecar mode 100%. Because sidecars will remain a feature of Istio indefinitely, ambient mode is free to progress in a modern architectural direction without carrying the baggage of its past. For example, traffic routing is configured with the Gateway API rather than Istio’s legacy VirtualService API.
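To make the contrast concrete, here is a minimal sketch of a Gateway API HTTPRoute that splits traffic for a Service, built as a Python dict and serialised to JSON (kubectl accepts JSON manifests as well as YAML). The Service name `reviews`, the backends `reviews-v1` and `reviews-v2`, port 9080 and the 90/10 weights are all hypothetical; attaching the route to a Service through `parentRefs`, rather than to a Gateway, follows the Gateway API's mesh (GAMMA) pattern.

```python
import json


def http_route(service: str, port: int, weights: dict[str, int]) -> dict:
    """Build an HTTPRoute that splits traffic sent to `service`.

    `weights` maps (hypothetical) backend Service names to relative weights.
    """
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": service},
        "spec": {
            # Mesh routing: the parent is the Service itself, not a Gateway.
            "parentRefs": [{"group": "", "kind": "Service", "name": service, "port": port}],
            "rules": [
                {
                    "backendRefs": [
                        {"name": name, "port": port, "weight": weight}
                        for name, weight in weights.items()
                    ]
                }
            ],
        },
    }


route = http_route("reviews", 9080, {"reviews-v1": 90, "reviews-v2": 10})
print(json.dumps(route, indent=2))
```

The same split in sidecar mode would typically have been written as a VirtualService with weighted destinations; here it is expressed with a standard Kubernetes API instead.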

There are currently features of Istio that are possible in sidecar mode but not in ambient mode. Some are gaps that will be closed over time, such as multi-cluster and VM support, both of which are implemented in Solo Enterprise for Istio, Solo’s product that adds features to upstream Istio.

Other features are not being carried forward, for a variety of reasons: a lack of usage, an acknowledgement that the API was poorly designed, or an API made entirely unnecessary by the new architecture.

Istio maintainer Ian Rudie encourages you to think of ambient mesh as something that is meant to disappear, not just in terms of infrastructure, but in terms of configuration too. It is not the result of trying to rearrange Istio, but rather the result of trying to build the best mesh experience for a Kubernetes user today. Approaching it from this point of view will make it easier to understand why certain changes were made, and how you should change your thinking, if not your configuration, when migrating.

The boxes and the lines

Sidecar mode

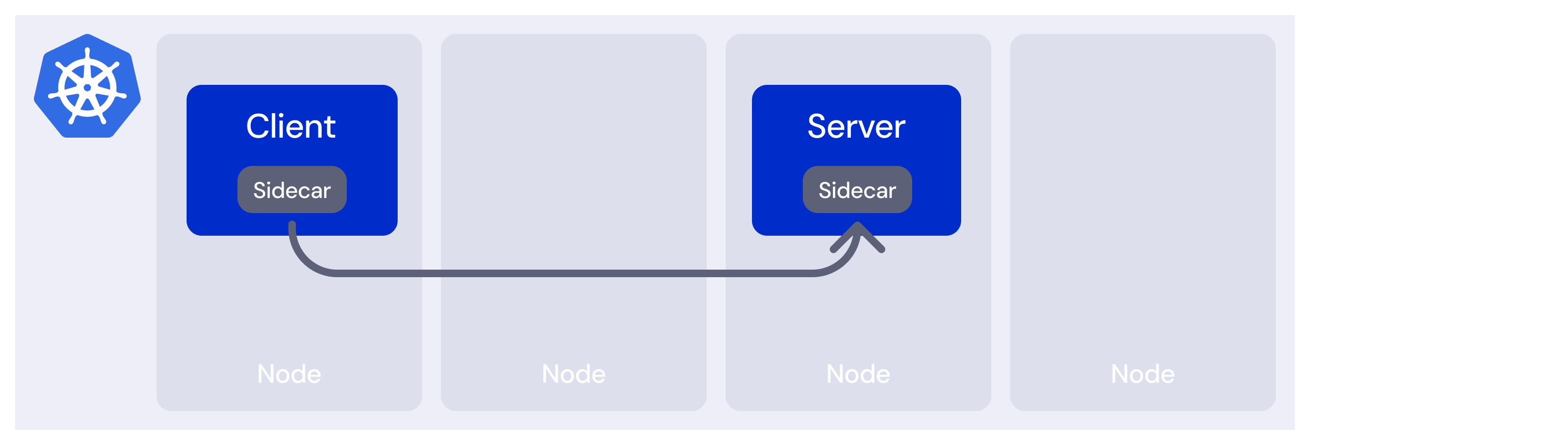

A minimal traditional Istio architecture diagram — with control planes and telemetry infrastructure elided for simplicity — looks like this:

The diagram shows nodes only to contrast it with the ambient mode diagrams that follow; in sidecar mode, nothing depends on whether the workloads run on the same node or on different ones.

Each workload has an embedded sidecar, which allows us to express an intention to the control plane, and have it enforced by either the client or the server sidecar, depending on where in the request path that policy should be applied.

To deploy sidecars, we have not changed the application, but we have changed the workload. This distinction lies at the heart of the ambient mesh value proposition: no matter how low-impact you can make your sidecar, you can’t solve this layering problem with sidecars.

Which sidecar does what?

| Client-side | Server-side | Both |
|---|---|---|
| Traffic management | Authorization/authentication | Telemetry |
| Load balancing | WebAssembly | mTLS |
| Connection pooling | | |

Most of this is obvious when you think about it. Load balancing is performed client-side because a Kubernetes Service can refer to multiple backends, and the sidecar needs to choose a single backend to connect to. Security policy is enforced server-side; otherwise a malicious client could simply opt not to perform the checks.
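A toy model makes that split concrete. The sketch below is a simplification, not Istio's implementation: the client sidecar resolves a Service to its endpoints and picks one (round robin here, though Envoy supports several algorithms), while the server sidecar checks the caller's identity against an allow-list before the request reaches the workload. The Service name, pod addresses and SPIFFE-style identities are hypothetical.

```python
import itertools


class ClientSidecar:
    """Client side: choose a single backend for a Service (load balancing)."""

    def __init__(self, endpoints: dict[str, list[str]]):
        # One round-robin iterator per Service name.
        self._cycles = {svc: itertools.cycle(eps) for svc, eps in endpoints.items()}

    def pick_endpoint(self, service: str) -> str:
        return next(self._cycles[service])


class ServerSidecar:
    """Server side: enforce authorization where the client cannot bypass it."""

    def __init__(self, allowed_callers: set[str]):
        self.allowed_callers = allowed_callers

    def admit(self, caller_identity: str) -> bool:
        return caller_identity in self.allowed_callers


client = ClientSidecar({"reviews": ["10.0.1.5", "10.0.2.7"]})
server = ServerSidecar({"spiffe://cluster.local/ns/shop/sa/frontend"})

picks = [client.pick_endpoint("reviews") for _ in range(3)]
print(picks)  # ['10.0.1.5', '10.0.2.7', '10.0.1.5']
print(server.admit("spiffe://cluster.local/ns/shop/sa/frontend"))  # True
print(server.admit("spiffe://cluster.local/ns/shop/sa/attacker"))  # False
```

A client that skipped its own checks would gain nothing, because `admit` runs on the server's side of the connection; only the client, on the other hand, knows which of several endpoints it is about to dial.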

In networking, we refer to the source of a request as the client and the destination as the server. In the world of API management, we might refer to the client being controlled by a consumer and the server being operated by a producer.

Ambient mode

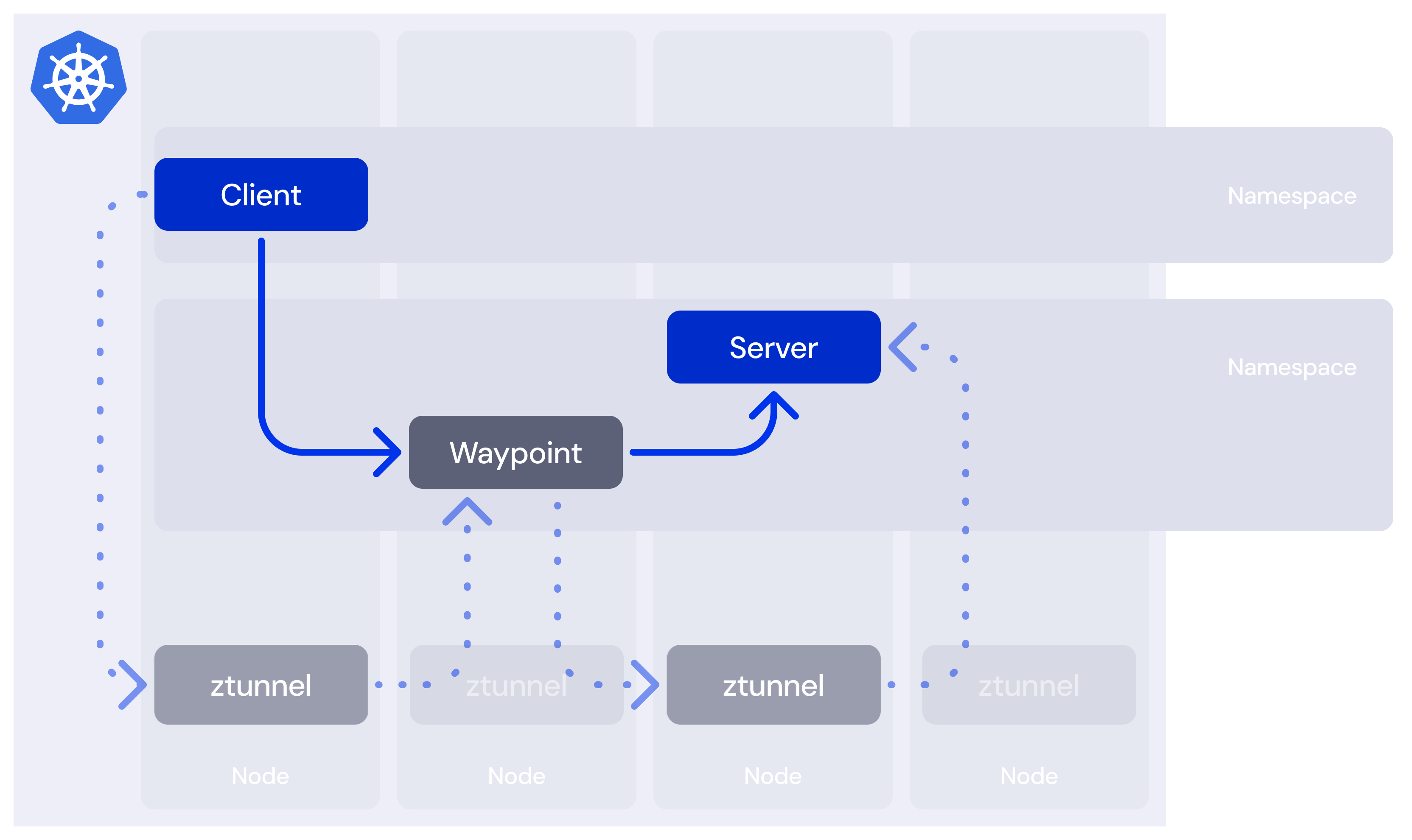

Ambient mode removes all the sidecars (in a structured fashion; more on that later) and replaces their functionality with ztunnel, a per-node Layer 4 proxy that works on both the client and server side, and waypoint proxies, which provide Layer 7 processing on the server side only. That sentence will require many hundreds more words to unpack, but the good news is that we end up with a much simpler mental model at the end.

Here, the diagram shows a waypoint in use for our server. So that they can enforce security policy, waypoints exist as a server-side deployment, and for ease of accounting they are usually deployed in the same namespace as the workloads they protect.

Moving processing from consumer to producer

Unlike server-side sidecars, our waypoint proxy exists separately from any single workload. That allows us to reduce our Layer 7 processing from two steps (one on the client sidecar, one on the server) to just one, with an important caveat: we now implement load balancing on the server side.
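One way to see the change is to list the proxies a request passes through in each mode and count the ones doing Layer 7 processing. This is a deliberately simplified model: it ignores gateways and the control plane, assumes client and server run on different nodes, and the hop names are illustrative rather than Istio terminology.

```python
from typing import NamedTuple


class Hop(NamedTuple):
    name: str
    layer: int  # 4 = connection-level work (mTLS, L4 policy); 7 = HTTP-aware processing


def request_path(mode: str, server_has_waypoint: bool = False) -> list[Hop]:
    """Proxies between the client and server applications (simplified model)."""
    if mode == "sidecar":
        # An Envoy sidecar in each pod, and both of them parse HTTP.
        return [Hop("client sidecar", 7), Hop("server sidecar", 7)]
    if mode == "ambient":
        # Assumes client and server pods run on different nodes.
        path = [Hop("client-node ztunnel", 4)]
        if server_has_waypoint:
            # Owned by the server side; load balancing now happens here.
            path.append(Hop("server waypoint", 7))
        path.append(Hop("server-node ztunnel", 4))
        return path
    raise ValueError(f"unknown mode: {mode!r}")


def l7_steps(path: list[Hop]) -> int:
    """Count the hops that do Layer 7 processing."""
    return sum(1 for hop in path if hop.layer == 7)


print(l7_steps(request_path("sidecar")))  # 2
print(l7_steps(request_path("ambient", server_has_waypoint=True)))  # 1
```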

This more cleanly separates the concerns of the consumer and the producer, because the producer is best placed to know how to care for their service (which is why the DestinationRule still serves a purpose even when you use the Gateway API). It also moves the cost of any processing from “all consumers” to the producer.

A more important win is that a waypoint only needs to know about the configuration of its namespace, whereas a sidecar has to know about every endpoint in the mesh. This change alone is responsible for serious savings at scale, and completely removes the need for manual configuration scoping (for example, with Istio’s Sidecar resource).
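A back-of-the-envelope model shows why. Assume, purely for illustration, a mesh of 50 namespaces with 40 pods each: in sidecar mode every pod runs a sidecar that learns every endpoint in the mesh, while in ambient mode there is one single-replica waypoint per namespace that learns only its own namespace's endpoints. Real proxy configuration depends on far more than endpoint counts, and this ignores the Layer 4 configuration ztunnel receives, so treat the numbers as a sketch of the scaling, not a measurement.

```python
def sidecar_endpoint_entries(namespaces: int, pods_per_namespace: int) -> int:
    total_pods = namespaces * pods_per_namespace
    # Every pod has a sidecar, and every sidecar learns every endpoint.
    return total_pods * total_pods


def waypoint_endpoint_entries(namespaces: int, pods_per_namespace: int) -> int:
    # One waypoint per namespace, each learning only its own namespace's endpoints.
    return namespaces * pods_per_namespace


sidecar = sidecar_endpoint_entries(50, 40)
waypoint = waypoint_endpoint_entries(50, 40)
print(sidecar, waypoint, sidecar // waypoint)  # 4000000 2000 2000
```

In this model the sidecar total grows with the square of the pod count while the waypoint total grows linearly, which is the gap that manual scoping used to paper over.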

To waypoint or not to waypoint?

Sure, moving to one Layer 7 processing step is nice. Can we get down to zero?

Far and away the most common use case for service mesh today is adding mTLS to Kubernetes. Thus, it bears repeating: you do not need to use waypoints. They are optional! They add a number of useful Layer 7 features, but you should consider whether you actually need or want those features, and you can make that decision on a per-workload basis.
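As a sketch of how that per-workload choice might be expressed: a namespace is enrolled in ambient mode with the `istio.io/dataplane-mode=ambient` label, and a Service (or a whole namespace) opts into a waypoint with the `istio.io/use-waypoint` label naming the waypoint. The helper below, the `MeshService` type, the workload inventory and its `needs_l7` flags are hypothetical; the code only builds the label sets you might then apply with `kubectl label` or a Kubernetes client, and assumes a waypoint named `waypoint` already exists in the namespace.

```python
from dataclasses import dataclass

AMBIENT_LABEL = {"istio.io/dataplane-mode": "ambient"}


@dataclass
class MeshService:
    name: str
    needs_l7: bool  # hypothetical flag: HTTP routing, L7 authorization, request metrics...


def plan_labels(
    namespace: str, services: list[MeshService], waypoint: str = "waypoint"
) -> dict[str, dict[str, str]]:
    """Map 'kind/name' to labels to add; mTLS-only services get no waypoint."""
    plan = {f"namespace/{namespace}": dict(AMBIENT_LABEL)}
    for svc in services:
        if svc.needs_l7:
            plan[f"service/{svc.name}"] = {"istio.io/use-waypoint": waypoint}
    return plan


plan = plan_labels(
    "shop", [MeshService("cart", needs_l7=False), MeshService("checkout", needs_l7=True)]
)
for target, labels in plan.items():
    print(target, labels)
# namespace/shop {'istio.io/dataplane-mode': 'ambient'}
# service/checkout {'istio.io/use-waypoint': 'waypoint'}
```

Here `cart` still gets mTLS from ztunnel with no Layer 7 hop at all, while only `checkout` pays for a waypoint.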

Ambient mesh with waypoints offers you all the administrative advantages and operates at a cost comparable to sidecars, hopefully a few percentage points lower. Ambient mesh without waypoints blows any other approach out of the water, while offering more throughput than even in-kernel solutions.

In the next post, we’ll look at what you, as an operator, will do differently when you’re running in ambient mode.