Building the Optimal Service Mesh Experience

Microservices and distributed application architectures offer many benefits over monolithic applications and traditional infrastructure, including:

- Faster time to market for new features and upgrades

- Independently scalable components and better resource utilization

- Geographically distributed applications

- Highly resilient applications for better customer experience

- Flexibility in deployment - on-premises, cloud, and hybrid

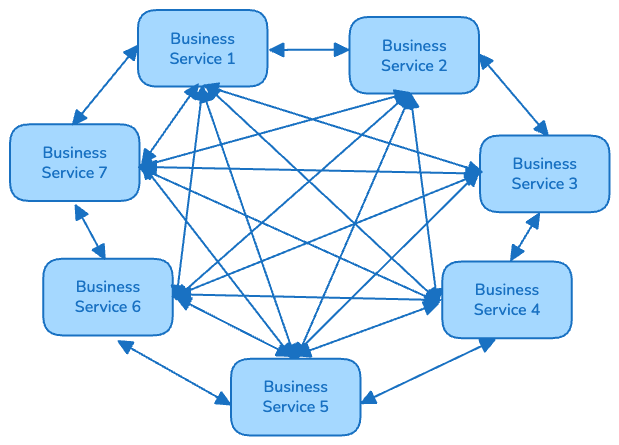

These benefits don’t come without costs, though. Microservices and distributed architectures introduce inherent complexity. One challenge is that what used to be in-memory communication within a monolithic application is now network communication between services. This raises a number of new concerns:

- Increased security blast radius

- Service discovery and routing

- Fault tolerance

- Observability

- Workload identification

Simple Service Graph

Netflix Service Graph

A service mesh aims to address these challenges. A service mesh provides encryption, service discovery, observability, and complex routing to free up application development teams to focus on solving business problems. A service mesh moves these concerns to the infrastructure layer as a platform component, and abstracts the implementation from application teams.

In this post, we will look at challenges associated with introducing a service mesh and how to provide a best-in-class experience for developers and platform operators.

The Optimal Service Mesh

So what does an optimal service mesh look like? There are two different personas that need to be considered in the life of a service mesh:

- Platform Engineer - The persona responsible for providing a high-quality platform product to support application development and operations.

- Application Developer - The persona that consumes platform services to enable high-velocity application development and frequent releases.

Each of these personas has different needs.

Platform Engineer

The platform engineer needs to provide a secure, performant, resilient, and scalable platform to their customers, the application developers. The platform must deliver value to those developers and keep evolving with their needs. A service mesh should contribute to the following in the platform:

- Security

- Observability

- Resiliency

- Scalability

- Multi-tenancy

- Policy, control, and auditability

Application Developer

The application developer is responsible for creating valuable software for the business, which is their customer. The developer is constantly working through a backlog of features to keep innovating on the business’s behalf. Application developers leverage the platform to reduce undifferentiated work that doesn’t contribute directly to business value. A service mesh should provide these features to the application developer:

- Advanced routing and traffic management

- Simplified authentication and authorization

- Self-service with low friction

- Observability

- Service registration and discovery

- Simple controls for progressive delivery practices

Istio Ambient Mesh

Istio is one of the best-known and most widely used service meshes. Launched in 2017, Istio is now part of the Cloud Native Computing Foundation (CNCF) and is broadly adopted by organizations of all sizes.

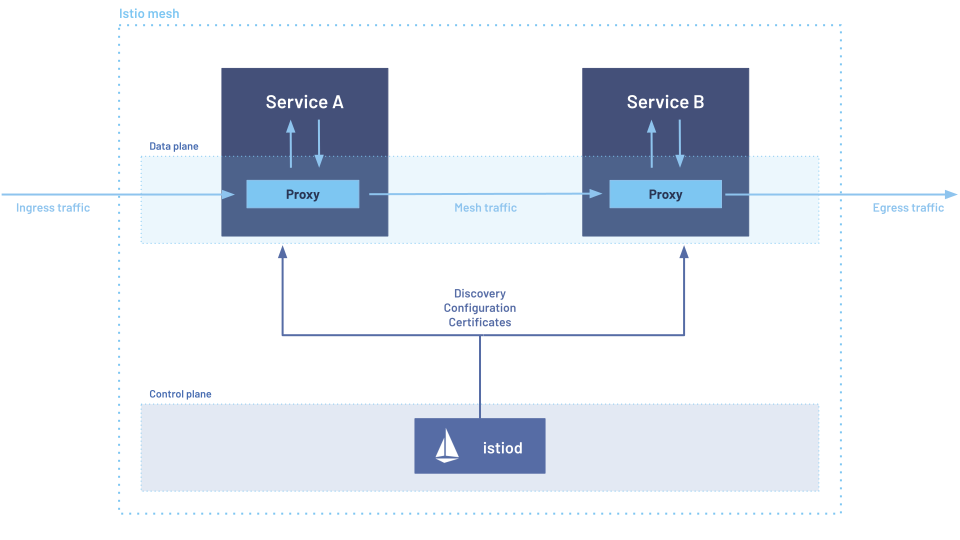

Istio operates with two layers: a data plane that handles all communication between services, and a control plane that configures and manages the data plane. Historically, the data plane was implemented by injecting ‘sidecar’ proxies into each running workload. The pod’s iptables rules are modified to redirect all inbound and outbound traffic through the proxy.

Istio Sidecar Architecture

The sidecar architecture is a proven solution, but it comes at a cost. Because every workload needs its own injected proxy, the sidecar model adds overhead in infrastructure, operations, and complexity.

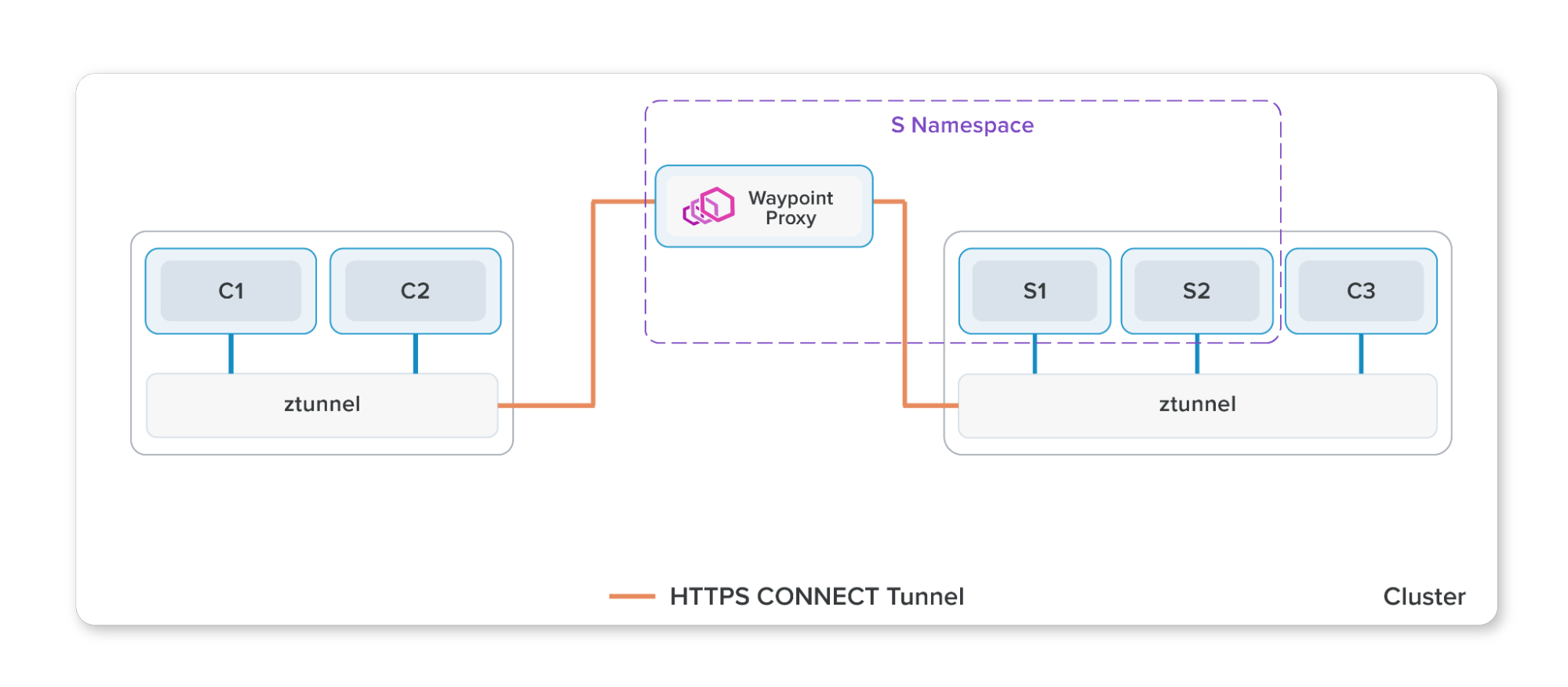

To address this, a new Ambient mode was introduced. With Ambient mode, the proxy responsibility is split into two components: OSI Layer 4 functions such as encryption and peer authorization are handled by a node-level proxy called ztunnel, and OSI Layer 7 features such as HTTP routing and header manipulation are performed by a proxy called a Waypoint. Waypoints are typically deployed per namespace.

Istio Ambient Mode

Ambient mode drastically reduces the infrastructure and operations required for Istio. By splitting Layer 4 and Layer 7 functions, you can selectively deploy Waypoints as needed. And deploying ztunnel proxies per node instead of per workload significantly reduces the infrastructure required for the data plane.
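As a rough sketch of what this split looks like in configuration, the Python below builds the two Kubernetes objects involved: a namespace labeled for Ambient mode (Layer 4 via ztunnel) and an optional Waypoint for Layer 7. It assumes a recent Istio release with the Kubernetes Gateway API CRDs installed; the namespace name `shop` and the waypoint name `waypoint` are hypothetical.

```python
import json
from typing import Optional


def ambient_namespace(name: str, waypoint: Optional[str] = None) -> dict:
    """Namespace manifest whose pods are captured by ztunnel (Layer 4)."""
    labels = {"istio.io/dataplane-mode": "ambient"}
    if waypoint is not None:
        # Opt the namespace into Layer 7 processing by the named waypoint.
        labels["istio.io/use-waypoint"] = waypoint
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name, "labels": labels},
    }


def waypoint_gateway(name: str, namespace: str) -> dict:
    """Waypoint proxy expressed as a Gateway API Gateway resource."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "Gateway",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "gatewayClassName": "istio-waypoint",
            "listeners": [{"name": "mesh", "port": 15008, "protocol": "HBONE"}],
        },
    }


# Hypothetical names, for illustration only.
namespace_manifest = ambient_namespace("shop", waypoint="waypoint")
waypoint_manifest = waypoint_gateway("waypoint", "shop")
print(json.dumps([namespace_manifest, waypoint_manifest], indent=2))
```

Leaving out the `waypoint` argument yields a namespace that only gets the Layer 4 features, which is the selective deployment described above. The printed JSON can be saved and applied with `kubectl apply -f`.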

Ambient Mesh for Platform Engineers

Ambient Mesh helps Platform Engineers meet their goals in many ways.

Security and Policy Enforcement

Ambient Mesh automatically enables mutual TLS (mTLS) encryption between workloads, and the mTLS certificates carry the workload identity. mTLS helps prevent man-in-the-middle attacks and allows for fine-grained access control policies. Ambient Mesh can also enforce Layer 7 policies, such as OAuth flows and limiting HTTP methods. Ambient Mesh’s ztunnel and Waypoint proxies act as Policy Enforcement Points for the service mesh.
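To make identity-based access control concrete, here is a minimal sketch that builds an Istio AuthorizationPolicy allowing only one service account to call a workload. It assumes the `security.istio.io/v1` API version (older releases use `v1beta1`) and the default `cluster.local` trust domain; the workload, namespace, and service account names are hypothetical.

```python
import json


def allow_only_service_account(
    policy_name: str,
    namespace: str,
    app_label: str,
    client_namespace: str,
    client_service_account: str,
    trust_domain: str = "cluster.local",
) -> dict:
    """AuthorizationPolicy that admits a single mTLS peer identity."""
    # Peer identity as presented in the client's mTLS certificate.
    principal = f"{trust_domain}/ns/{client_namespace}/sa/{client_service_account}"
    return {
        "apiVersion": "security.istio.io/v1",
        "kind": "AuthorizationPolicy",
        "metadata": {"name": policy_name, "namespace": namespace},
        "spec": {
            "selector": {"matchLabels": {"app": app_label}},
            "action": "ALLOW",
            "rules": [{"from": [{"source": {"principals": [principal]}}]}],
        },
    }


# Hypothetical workloads: only "frontend" may call "payments".
policy = allow_only_service_account(
    "payments-allow-frontend", "shop", "payments", "shop", "frontend"
)
print(json.dumps(policy, indent=2))
```

Because this rule relies only on the peer identity, it is the kind of Layer 4 policy that ztunnel can enforce; rules that match HTTP methods or paths need a Waypoint.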

Observability

Since all traffic is controlled and monitored by Ambient Mesh, it has access to a rich set of telemetry data for your workloads. This telemetry data can then be ingested by observability tools. Distributed tracing tools can also give insight into issues in service graphs by integrating with Ambient Mesh.

Multi-tenancy

Ambient Mesh provides a rich set of policy controls to manage and isolate multiple tenants within a service mesh. You can control access to resources with APIs such as authorization policies. Peer identities can be inspected, validated, and authorized. And for enterprise-grade multi-tenancy, Solo Enterprise for Istio allows you to span multiple Kubernetes clusters to implement a cluster-per-tenant model.

Resilience

Ambient Mesh enables workloads to become more resilient to network latencies and upstream service failures. Ambient Mesh provides features such as retries, timeouts, and circuit breaking to enable applications to gracefully handle upstream issues and network problems.
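For readers unfamiliar with circuit breaking, the following is a conceptual sketch of the behavior that a mesh takes off the application’s hands. It is not Istio’s implementation; the class name, thresholds, and injected clock are all illustrative assumptions.

```python
import time


class CircuitOpenError(Exception):
    """Raised when calls are rejected without contacting the upstream."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # seconds to wait before a trial call
        self.clock = clock                # injectable for deterministic tests
        self.failures = 0
        self.opened_at = None

    def call(self, fn):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Fail fast instead of piling load onto a struggling upstream.
                raise CircuitOpenError("circuit open; request rejected")
            # Cool-down elapsed: close the circuit and allow a trial request.
            self.opened_at = None
            self.failures = 0
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success resets the failure streak
        return result


def failing_upstream():
    raise RuntimeError("upstream unavailable")


# Demonstration with a fake clock so the outcome does not depend on real time.
fake_now = [0.0]
breaker = CircuitBreaker(max_failures=2, reset_after=10.0, clock=lambda: fake_now[0])
for _ in range(2):
    try:
        breaker.call(failing_upstream)
    except RuntimeError:
        pass

try:
    breaker.call(lambda: "ok")
    rejected = False
except CircuitOpenError:
    rejected = True

fake_now[0] = 11.0  # advance past the cool-down period
recovered = breaker.call(lambda: "ok")
print(rejected, recovered)
```

With a service mesh, this logic lives in the proxy and is driven by configuration, so each service does not need to embed and tune its own copy.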

Ambient Mesh for Application Developers

Ambient Mesh also helps Application Developers meet their goals by providing features that developers would otherwise have to build themselves and that do not directly contribute to business value.

Traffic Management

Ambient Mesh has a large set of APIs to route, split, authorize, and modify traffic to services within the mesh. Developers can control routing and load balancing of traffic. They can do more advanced traffic management such as splitting traffic between versions of services or mirroring traffic to different services. HTTP header values can be inspected, validated, and modified with Ambient Mesh. And resilience features such as circuit breaking can be controlled by development teams.
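As an illustration of version-based traffic splitting, this sketch builds a Gateway API HTTPRoute that attaches to a Service and divides requests between backend Services by weight. It assumes a Waypoint is in place to handle Layer 7 routing; the service names `reviews`, `reviews-v1`, and `reviews-v2`, the namespace, and the port are hypothetical.

```python
import json
from typing import Dict


def weighted_route(
    name: str, namespace: str, service: str, port: int, weights: Dict[str, int]
) -> dict:
    """HTTPRoute that splits traffic for `service` across weighted backends."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Attaching to a Service (rather than a Gateway) targets mesh traffic.
            "parentRefs": [
                {"group": "", "kind": "Service", "name": service, "port": port}
            ],
            "rules": [
                {
                    "backendRefs": [
                        {"name": backend, "port": port, "weight": weight}
                        for backend, weight in weights.items()
                    ]
                }
            ],
        },
    }


# Hypothetical 90/10 split between two versions of a service.
route = weighted_route(
    "reviews-split", "shop", "reviews", 9080, {"reviews-v1": 90, "reviews-v2": 10}
)
print(json.dumps(route, indent=2))
```

Weights are relative, so `{"reviews-v1": 9, "reviews-v2": 1}` would express the same split.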

Service Registration and Discovery

Implementing and maintaining service registration and discovery can be tedious for developers. Ambient Mesh automatically maintains a registry of services and endpoints in the mesh. Services outside the mesh can be added using the ServiceEntry API, allowing developers to augment the service registry.
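A ServiceEntry for an external dependency might look roughly like the manifest built below. This is a sketch assuming the `networking.istio.io/v1` API version (older releases use `v1beta1`); the host `api.payments.example.com` and the resource names are hypothetical.

```python
import json


def external_https_service(name: str, namespace: str, host: str) -> dict:
    """ServiceEntry that registers an external HTTPS host with the mesh."""
    return {
        "apiVersion": "networking.istio.io/v1",
        "kind": "ServiceEntry",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "hosts": [host],
            "location": "MESH_EXTERNAL",  # the service runs outside the mesh
            "resolution": "DNS",          # resolve endpoints by DNS lookup
            "ports": [{"number": 443, "name": "https", "protocol": "HTTPS"}],
        },
    }


# Hypothetical external dependency.
entry = external_https_service("payments-api", "shop", "api.payments.example.com")
print(json.dumps(entry, indent=2))
```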

Progressive Delivery

Progressive delivery is the practice of releasing software to subsets of users, delivering features incrementally in a controlled, low-risk manner. Common techniques such as canary deployments, percentage-based rollouts, A/B testing, and feature flags all require automated inspection and manipulation of routes and headers. Ambient Mesh’s traffic management APIs integrate with popular tools such as Argo Rollouts, which greatly reduces the effort of adopting progressive delivery.
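The arithmetic behind a percentage-based rollout is simple enough to sketch. The helper below is a hypothetical illustration, not part of Argo Rollouts or Istio: it turns a list of canary percentages into the stable/canary weight pairs that a rollout controller would write into the route at each step.

```python
from typing import List, Tuple


def canary_weights(steps: List[int]) -> List[Tuple[int, int]]:
    """Return (stable_weight, canary_weight) for each rollout step."""
    schedule = []
    previous = 0
    for canary in steps:
        if not 0 <= canary <= 100:
            raise ValueError(f"canary percentage out of range: {canary}")
        if canary < previous:
            raise ValueError("canary percentages must not decrease")
        # Whatever the canary does not receive stays on the stable version.
        schedule.append((100 - canary, canary))
        previous = canary
    return schedule


schedule = canary_weights([10, 50, 100])
print(schedule)  # [(90, 10), (50, 50), (0, 100)]
```

In practice a rollout tool pauses between steps and checks metrics before moving to the next pair of weights, rolling back if the canary misbehaves.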

Self-service

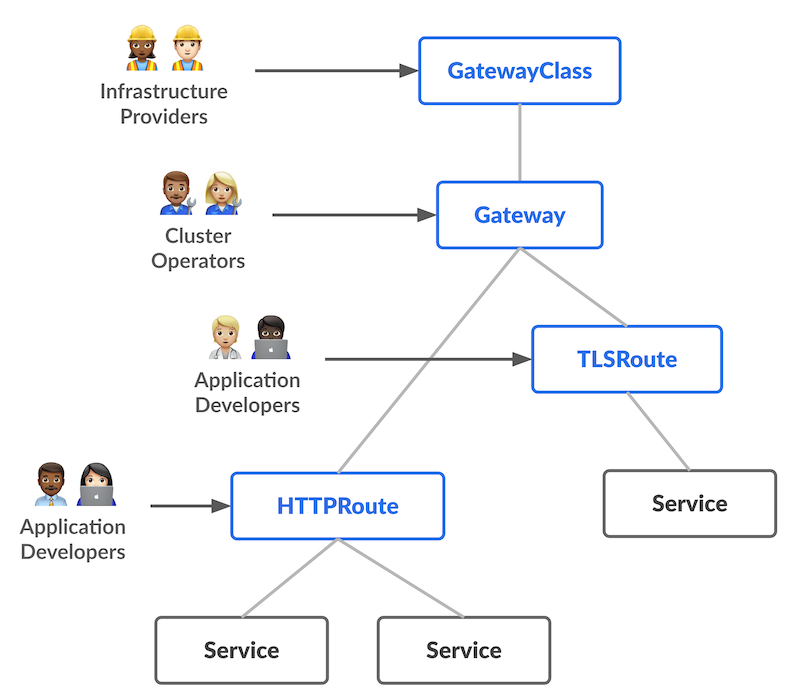

The Kubernetes Gateway API was designed to be deeply role-oriented; organizational roles are expected to use and configure different resources that are in their domain. It is designed so that developers manage the routing logic for their applications, and associate these routes with gateways managed by platform teams.

Kubernetes Gateway API model

Ambient Mesh is based on the Kubernetes Gateway API and its extensibility framework. Because of this, developers can manage traffic routing and manipulation in a declarative, self-service model, without relying on ticket-based systems to implement routing logic.

Conclusion

Ambient Mesh represents a major shift in how organizations can adopt a service mesh. When designed correctly, Ambient Mesh brings a host of benefits to users:

- Drastically reduced operational burden for platform teams, compared to sidecar-based meshes.

- Significant reduction in infrastructure requirements for a service mesh.

- Secure-by-default model, providing mTLS, workload identity, and authorization policies out of the box.

- Complex traffic management for application developers, with declarative, self-service configuration.

We invite you to get started with Ambient Mesh today! Here are ways that you can get involved:

- Check out the documentation for Ambient Mesh.

- Follow one of our hands-on labs.

- Install Ambient Mesh in a test cluster.

- Check out the enterprise features in Solo Enterprise for Istio.